45 pytorch dataloader without labels

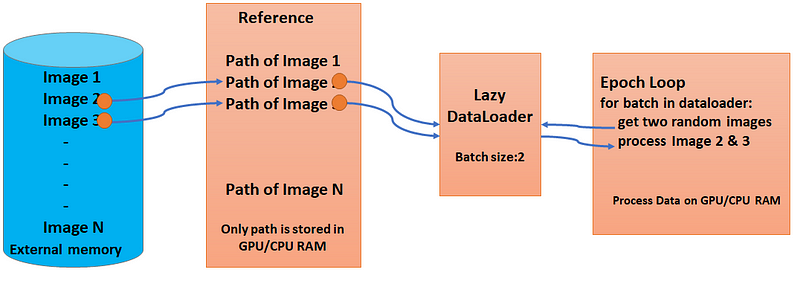

PyTorch Dataloader + Examples - Python Guides: In this section, we will learn how a PyTorch DataLoader can add dimensions in Python. The DataLoader in PyTorch seems to add some additional dimensions after the batch dimension. Code: In the following code, we will import the torch module, from which we can add a dimension.

DataLoader without dataset replica · Issue #2052 · pytorch/pytorch · GitHub: Ah, I'm sorry. I just realized that it might actually be getting pickled. In such a case there are two options: 1. make the numpy array mmap a file (the kernel will take care of everything for you and won't duplicate the pages); 2. use a torch tensor inside your dataset and call .share_memory_() before you start iterating over the data loader.
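
The Python Guides excerpt above talks about added dimensions without showing them. As a minimal sketch (the tensor sizes and variable names are assumptions made for illustration, not taken from that article), the batch dimension can be observed on a small unlabeled dataset, and torch.unsqueeze adds a dimension by hand:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical data: 10 unlabeled samples with 3 features each.
features = torch.arange(30, dtype=torch.float32).reshape(10, 3)
dataset = TensorDataset(features)

# A single sample has shape (3,).
sample_shape = tuple(dataset[0][0].shape)

# The loader stacks samples along a new leading (batch) axis.
loader = DataLoader(dataset, batch_size=4, shuffle=False)
batch_shapes = [tuple(batch[0].shape) for batch in loader]

# Adding a dimension manually with unsqueeze.
manual_shape = tuple(features[0].unsqueeze(0).shape)

print(sample_shape, batch_shapes, manual_shape)
```

The last batch is smaller because 10 is not divisible by 4; passing drop_last=True to DataLoader would discard it.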

PyTorch: Train without dataloader (loop through dataframe instead). Related questions: Create price matrix from tidy data without for loop; Loading own train data and labels in dataloader using pytorch?; Can pytorch / keras support dataloader object of Image and Text?; Python: Fast indexing of strings in nested list without loop; pytorch __init__() got an unexpected keyword argument 'train'.

Pytorch dataloader without labels

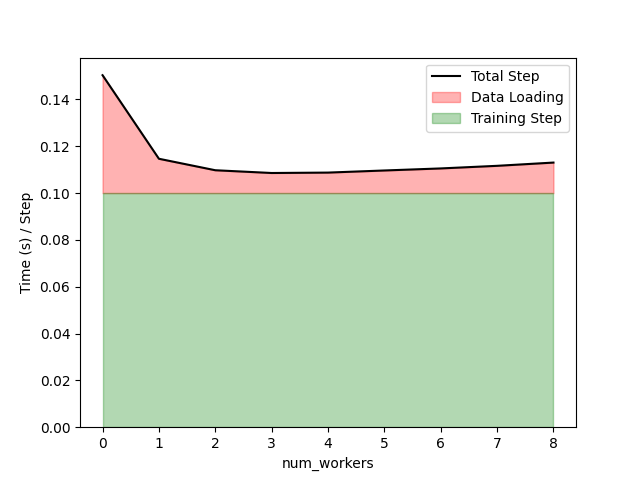

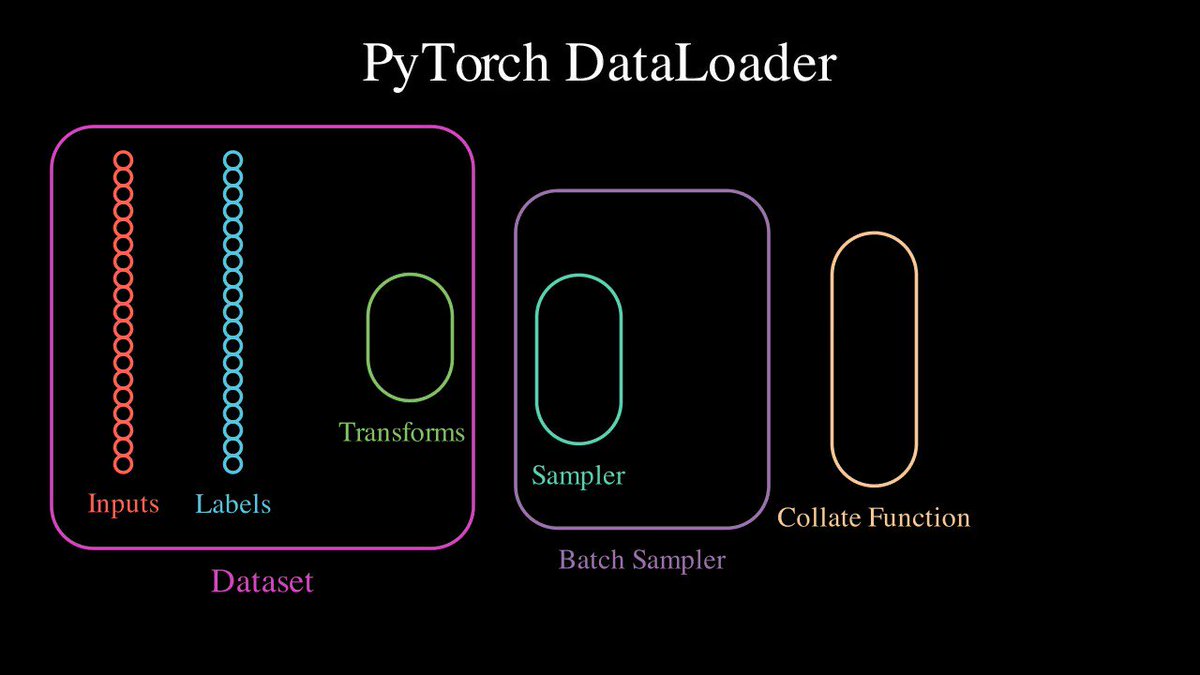

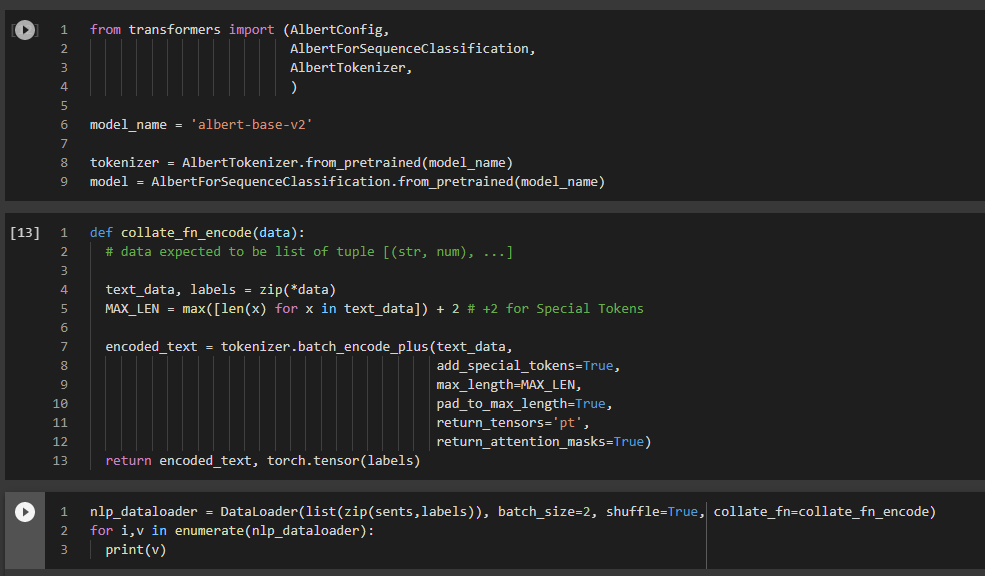

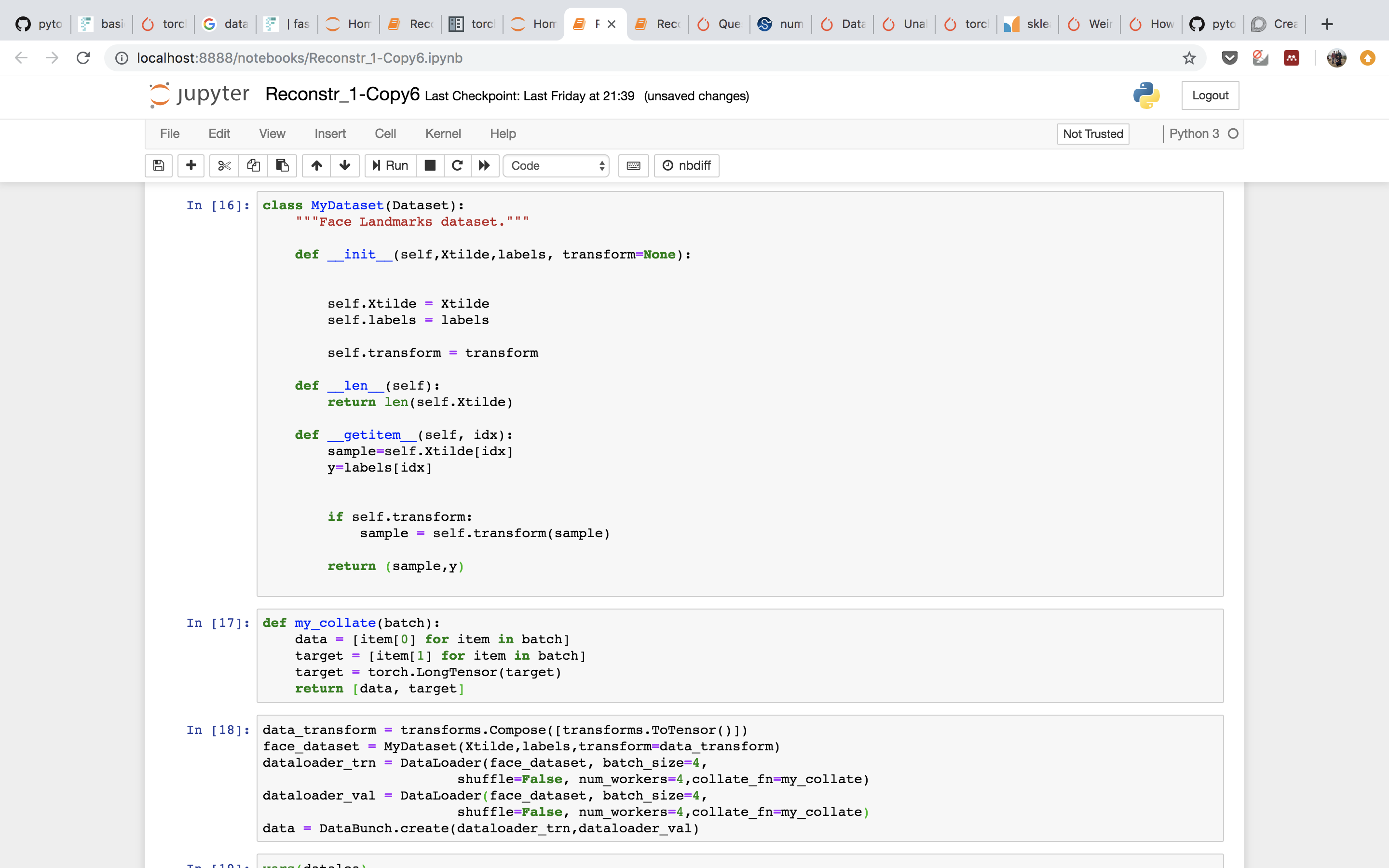

Loading own train data and labels in dataloader using pytorch? # create a dataset like the one you describe from sklearn.datasets import make_classification x,y = make_classification() # load necessary pytorch packages from torch.utils.data import DataLoader, TensorDataset from torch import Tensor # create dataset from several tensors with matching first dimension # samples will be drawn from the first …

python - How to use 'collate_fn' with dataloaders? - Stack ... Dec 13, 2020 · DataLoader(toy_dataset, collate_fn=collate_fn, batch_size=5) With this collate_fn function, you will always get a tensor in which all your examples have the same size. So, when you feed your forward() function with this data, you need to use the lengths to recover the original data, so that those meaningless zeros are not used in your computation.

Load Pandas Dataframe using Dataset and DataLoader in PyTorch. Then, the file output is separated into features and labels accordingly. Finally, we convert our dataset into torch tensors. Create DataLoader. To train a deep learning model, we need to create a DataLoader from the dataset. DataLoaders offer multi-worker, multi-processing capabilities without requiring us to write code for that.
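
The collate_fn excerpt above describes padding examples to a common size and then using the lengths to ignore the padding. The following is a sketch of that idea, not the code from the Stack Overflow answer: the toy data, the masking step, and the variable names are assumptions made for illustration.

```python
import torch
from torch.nn.utils.rnn import pad_sequence
from torch.utils.data import DataLoader

# Hypothetical toy dataset: unlabeled 1-D sequences of different lengths.
toy_dataset = [torch.ones(n) for n in (2, 5, 3, 1, 4, 2)]


def collate_fn(batch):
    """Pad a list of 1-D tensors to a common length and keep the true lengths."""
    lengths = torch.tensor([len(seq) for seq in batch])
    padded = pad_sequence(batch, batch_first=True, padding_value=0.0)
    return padded, lengths


loader = DataLoader(toy_dataset, collate_fn=collate_fn, batch_size=5)
padded, lengths = next(iter(loader))

# Build a mask from the lengths so the padding zeros are excluded.
mask = torch.arange(padded.size(1)) < lengths.unsqueeze(1)
means = (padded * mask).sum(dim=1) / lengths
```

Because every real value here is 1, each per-sequence mean comes out as 1 when the padding is masked correctly; dividing by the padded width instead would give smaller values.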

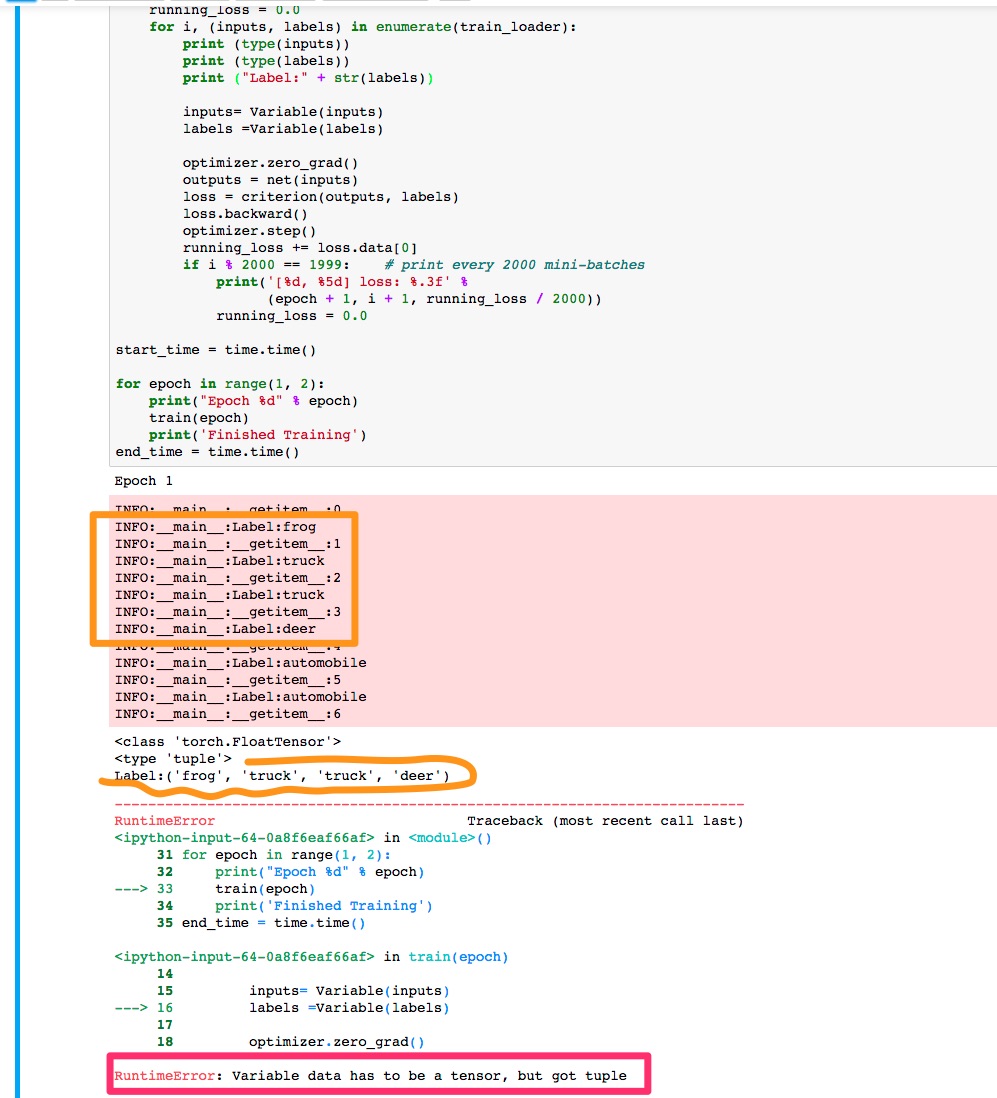

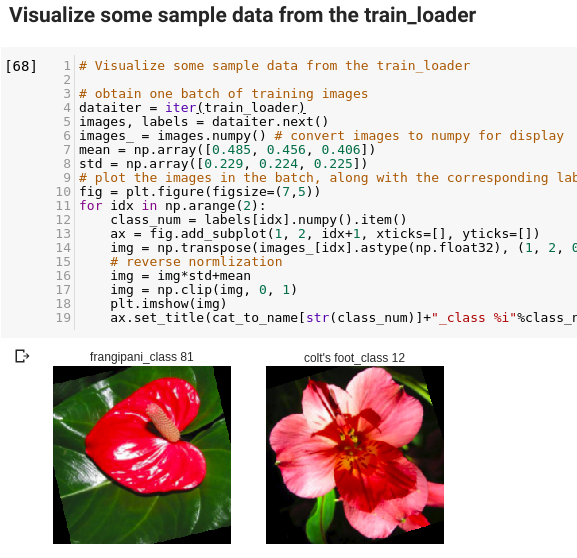

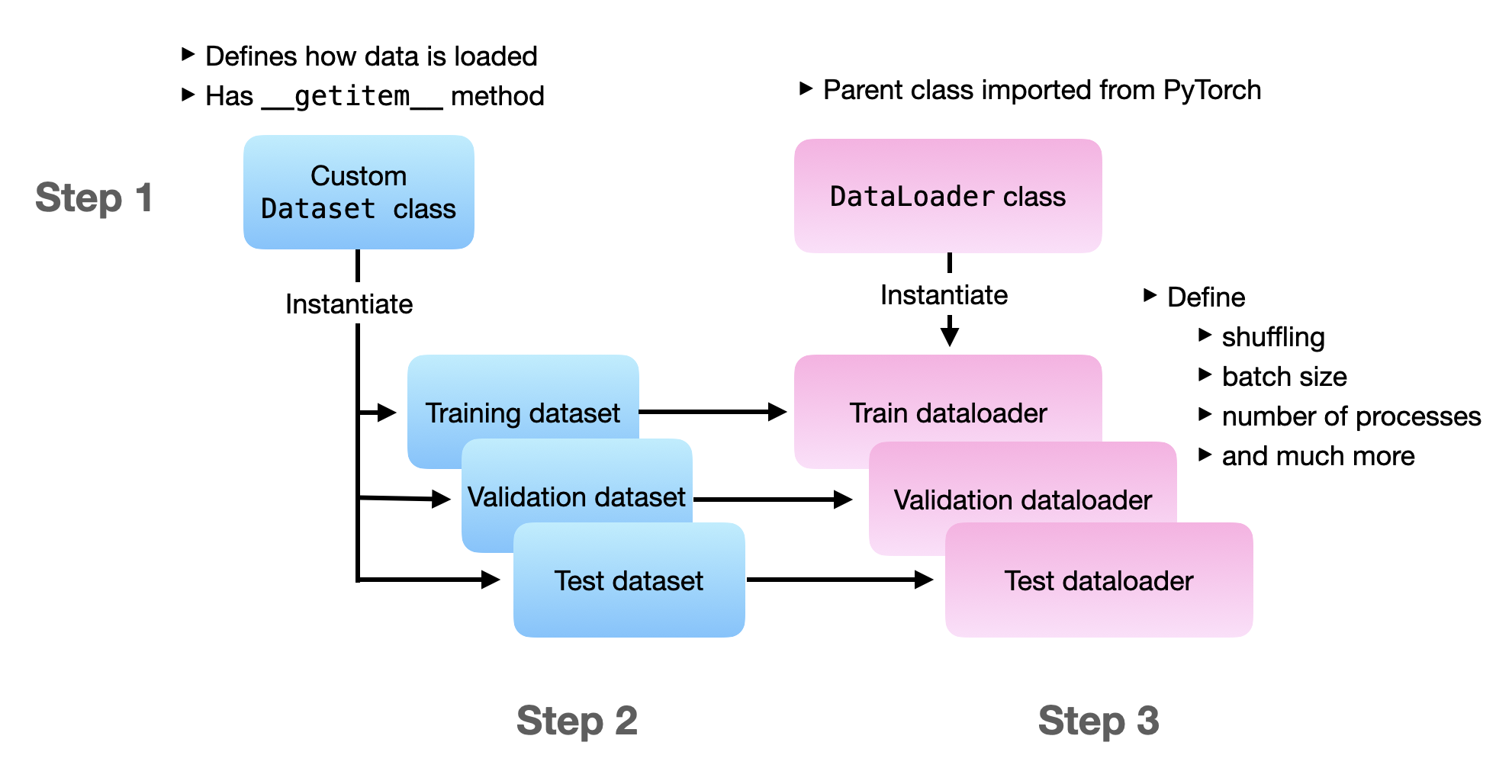

Iterating through DataLoader using iter() and next() in PyTorch: Our DataLoader would process the data and return 8 batches of 4 images each. The Dataset class is an abstract class representing the dataset. It allows us to treat the dataset as an object of a class, rather than a set of data and labels. In the supervised case, a Dataset returns a pair of [input, label] each time it is indexed.

torch.utils.data - PyTorch 1.12 documentation: At the heart of PyTorch data loading utility is the torch.utils.data.DataLoader class. It represents a Python iterable over a dataset, with support for map-style and iterable-style datasets, customizing data loading order, automatic batching, single- and multi-process data loading, automatic memory pinning. These options are configured by the ...

Creating a dataloader without target values - PyTorch Forums: I am trying to create a dataloader that will return batches of input data that doesn't have target data. Here's what I am doing: torch_input = torch.from_numpy(x_train) torch_target = torch.from_numpy(y_train) ds_x = torch.utils.data.TensorDataset(torch_input) ds_y = torch.utils.data.TensorDataset(torch_target) train_loader = torch ...

Beginner's Guide to Loading Image Data with PyTorch: Create a DataLoader. The following steps are pretty standard: first we create a transformed_dataset using the vaporwaveDataset class, then we pass the dataset to the DataLoader class, along with a few other parameters (you can copy paste these) to get the train_dl. batch_size = 64 transformed_dataset = vaporwaveDataset(ims=X_train)
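
The forum question above builds a TensorDataset from inputs only. A minimal sketch of how that can look end to end follows; x_train here is random stand-in data (an assumption), sized so that the loader yields 8 batches of 4 samples as in the iter()/next() excerpt.

```python
import numpy as np
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in for x_train: 32 samples with 10 features each.
x_train = np.random.rand(32, 10).astype(np.float32)

torch_input = torch.from_numpy(x_train)
ds_x = TensorDataset(torch_input)  # inputs only, no targets
train_loader = DataLoader(ds_x, batch_size=4, shuffle=False)

num_batches = len(train_loader)  # 32 samples / 4 per batch

# TensorDataset yields one-element tuples, so each batch is a
# one-element sequence; the trailing comma unpacks it.
(first_batch,) = next(iter(train_loader))
```

In a loop, the same unpacking applies: `for (xb,) in train_loader:`.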

How to load Images without using 'ImageFolder' - PyTorch Forums: Transforms can be leveraged, but aren't required. The sample code above should work… Just pass in your image folder path when you instantiate the DataSet, e.g. my_dataset = CustomDataSet("path/to/root/", transform=your_transforms). If you aren't using transforms, remove the 3 references to transform in your CustomDataSet code.

torch_geometric.loader - pytorch_geometric documentation: DataLoader. A data loader which merges data objects from a torch_geometric.data.Dataset to a mini-batch. NodeLoader. A data loader that performs neighbor sampling from node information, using a generic BaseSampler implementation that defines a sample_from_nodes() function and is supported on the provided input data object.

Data loader without labels? - PyTorch Forums: cossio, January 19, 2020, 6:03pm #1: Is there a way to use the DataLoader machinery with unlabeled data? ptrblck, January 20, 2020, 2:11am #2: Yes, DataLoader doesn ...

Custom Dataset and Dataloader in PyTorch - DebuggerCafe: testloader = DataLoader(test_data, batch_size=128, shuffle=True) In the __init__() function we initialize the images, labels, and transforms. Note that by default the labels and transforms parameters are None. We will pass them as arguments depending on our requirements for the project.
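
The DebuggerCafe excerpt above describes a dataset whose labels and transforms default to None. One way this could be written is sketched below; the class name OptionalLabelDataset and the random tensors are assumptions for illustration and are not the article's code.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class OptionalLabelDataset(Dataset):
    """Hypothetical dataset whose labels and transforms default to None."""

    def __init__(self, images, labels=None, transforms=None):
        self.images = images
        self.labels = labels
        self.transforms = transforms

    def __len__(self):
        return len(self.images)

    def __getitem__(self, idx):
        image = self.images[idx]
        if self.transforms is not None:
            image = self.transforms(image)
        if self.labels is None:
            return image  # unlabeled: input only
        return image, self.labels[idx]  # labeled: (input, label)


# Stand-in data: 6 single-channel 8x8 "images".
images = torch.rand(6, 1, 8, 8)
test_data = OptionalLabelDataset(images)
train_data = OptionalLabelDataset(images, labels=torch.arange(6))

test_batch = next(iter(DataLoader(test_data, batch_size=3)))
train_images, train_labels = next(iter(DataLoader(train_data, batch_size=3)))
```

The same class then serves both a labeled training set and an unlabeled test set, at the cost of the caller needing to know which shape of batch to expect.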

Image Data Loaders in PyTorch - PyImageSearch: A DataLoader accepts a PyTorch dataset and outputs an iterable which enables easy access to data samples from the dataset. On Lines 68-70, we pass our training and validation datasets to the DataLoader class. A PyTorch DataLoader accepts a batch_size so that it can divide the dataset into chunks of samples.

PyTorch DataLoader Quick Start - Sparrow Computing: PyTorch Dataset objects are very flexible; they can return any kind of tensor(s) you want. But supervised training datasets should usually return an input tensor and a label. For illustration purposes, let's create a dataset where the input tensor is a 3×3 matrix with the index along the diagonal. The label will be the index.

Loading data in PyTorch - PyTorch Tutorials 1.12.1+cu102 documentation: 3. Loading the data. Now that we have access to the dataset, we must pass it through torch.utils.data.DataLoader. The DataLoader combines the dataset and a sampler, returning an iterable over the dataset. data_loader = torch.utils.data.DataLoader(yesno_data, batch_size=1, shuffle=True) 4. Iterate over the data.

Datasets & DataLoaders - PyTorch Tutorials 1.12.1+cu102 documentation: PyTorch provides two data primitives: torch.utils.data.DataLoader and torch.utils.data.Dataset that allow you to use pre-loaded datasets as well as your own data. Dataset stores the samples and their corresponding labels, and DataLoader wraps an iterable around the Dataset to enable easy access to the samples.
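
The illustration dataset described in the Sparrow Computing excerpt above (a 3×3 input with the index along the diagonal, labeled by the index) could be sketched as follows. The class name DiagonalDataset and the dataset size are assumptions; the original article's code may differ.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class DiagonalDataset(Dataset):
    """Hypothetical dataset: sample i is a 3x3 matrix with i on the diagonal."""

    def __init__(self, size):
        self.size = size

    def __len__(self):
        return self.size

    def __getitem__(self, idx):
        x = torch.eye(3) * idx  # input tensor
        y = idx                 # label is the index itself
        return x, y


loader = DataLoader(DiagonalDataset(5), batch_size=2, shuffle=False)
inputs, labels = next(iter(loader))
```

With shuffle=False the first batch holds samples 0 and 1, so the second matrix in the batch is the identity.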

BrokenPipeError: [Errno 32] Broken pipe #2341 - GitHub (pytorch/pytorch), Aug 08, 2017 · And I just made some PyTorch forum posts regarding this. The problem lies with Python's multiprocessing and Windows. Please see this PyTorch discussion reply, as I don't want to copy and paste too much here. Edit: Here's the code that doesn't crash, which at the same time complies with Python's multiprocessing programming guidelines for Windows ...

Issue with DataLoader with lr_finder.range_test #71 - GitHub: inputs_labels_from_batch() was designed so that users do not have to modify their existing dataset or data loader code; you can implement your logic inside it. Note that the value returned by inputs_labels_from_batch() must be 2 array-like objects, as line 41 shows:

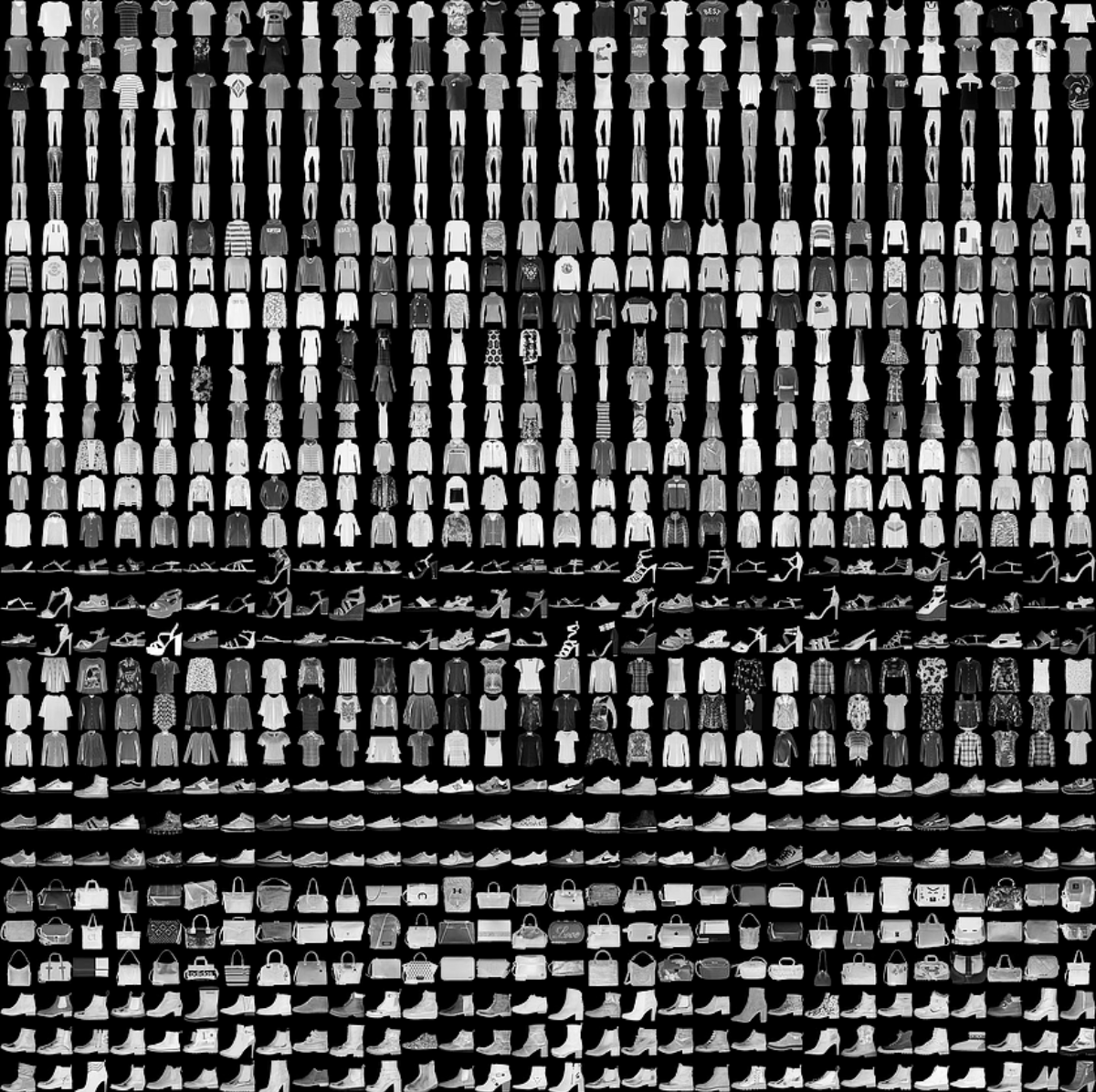

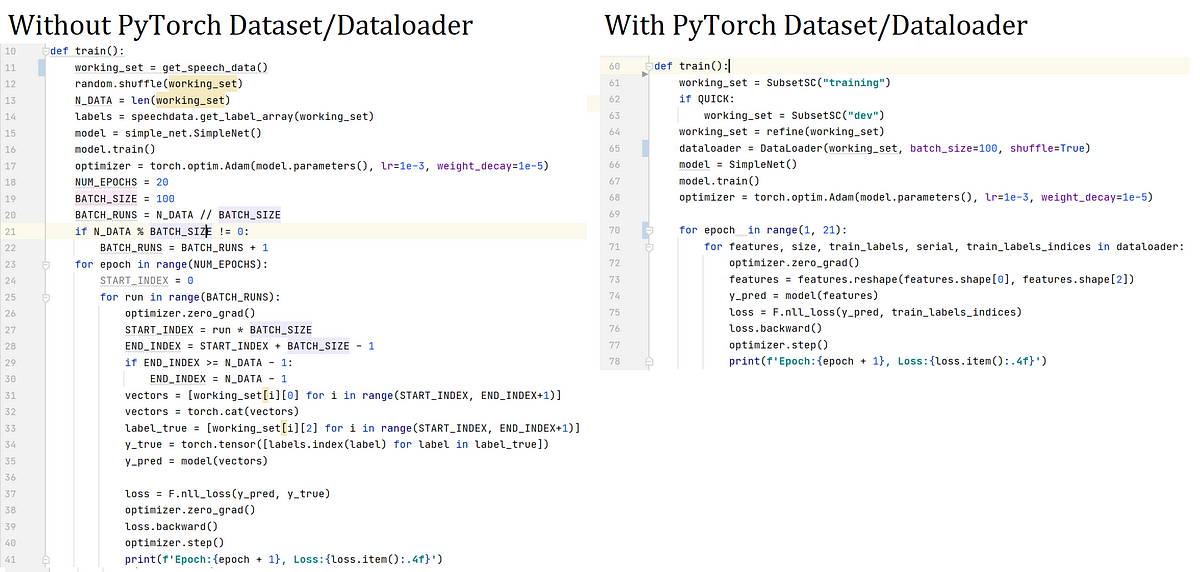

Writing Custom Datasets, DataLoaders and Transforms - PyTorch: A lot of effort in solving any machine learning problem goes into preparing the data. PyTorch provides many tools to make data loading easy and, hopefully, to make your code more readable. In this tutorial, we will see how to load and preprocess/augment data from a non-trivial dataset.

DataLoader returns labels that do not exist in the DataSet When I pass this dataset to a DataLoader (with or without a sampler) it returns labels that are outside the label set, for example 112, 105 etc… I am very confused as to how this is happening as I tried to simplify things as much as possible and it still happens.

Image Classification with PyTorch | Pluralsight, Apr 01, 2020 · Dataset and Dataloader, PyTorch's data loading utility: import pandas as pd; import matplotlib.pyplot as plt; import torch; import torch.nn.functional as F; import torchvision; import torchvision.transforms as transforms; from torch.utils.data import Dataset, DataLoader; from sklearn.model_selection import train_test ...

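An in-depth analysis of the PyTorch DataLoader - ranjiewen - cnblogs (博客园): First, a brief introduction to DataLoader. It is an important data-reading interface in PyTorch, defined in dataloader.py, and almost any model trained with PyTorch will use it (unless the user rewrites…). Its purpose is to wrap a custom Dataset, according to the batch size, whether to shuffle, and so on, into tensors of batch-size size ...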

Create a pyTorch testing Dataset (without labels) - Stack Overflow: This works well for my training data, but I get an error (KeyError: "['label'] not found in axis") when loading the testing csv file, which is identical except that it has no "label" column. If it helps, the intended input is MNIST data in a csv file with 28*28 feature columns.
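
The KeyError in the question above typically appears when the label column is dropped unconditionally. A hedged sketch of one workaround is to check for the column before dropping it. The class name CsvDataset, the tiny four-column CSVs, and the "label" default are assumptions for illustration; this is not the accepted answer from that thread.

```python
import io

import pandas as pd
import torch
from torch.utils.data import DataLoader, Dataset


class CsvDataset(Dataset):
    """Hypothetical CSV dataset that works with or without a label column."""

    def __init__(self, csv_file, label_column="label"):
        frame = pd.read_csv(csv_file)
        if label_column in frame.columns:
            self.labels = torch.tensor(frame[label_column].to_numpy(), dtype=torch.long)
            frame = frame.drop(columns=[label_column])
        else:
            self.labels = None  # test file: no labels available
        self.features = torch.tensor(frame.to_numpy(), dtype=torch.float32)

    def __len__(self):
        return len(self.features)

    def __getitem__(self, idx):
        if self.labels is None:
            return self.features[idx]
        return self.features[idx], self.labels[idx]


# Stand-in CSVs with 4 feature columns instead of 28*28.
train_csv = io.StringIO("label,p0,p1,p2,p3\n7,0,10,20,30\n2,5,15,25,35\n")
test_csv = io.StringIO("p0,p1,p2,p3\n1,2,3,4\n5,6,7,8\n")

train_x, train_y = next(iter(DataLoader(CsvDataset(train_csv), batch_size=2)))
test_x = next(iter(DataLoader(CsvDataset(test_csv), batch_size=2)))
```

pd.read_csv accepts a file path as well as a file-like object, so the same class can be pointed at real files.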

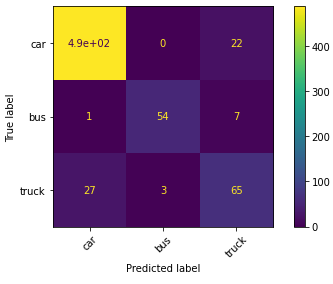

Multilabel Classification With PyTorch In 5 Minutes Our custom dataset and the dataloader work as intended. We get one dictionary per batch with the images and 3 target labels. With this we have the prerequisites for our multilabel classifier. Custom Multilabel Classifier (by the author) First, we load a pretrained ResNet34 and display the last 3 children elements.
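
The excerpt above mentions getting one dictionary per batch with the images and 3 target labels. A minimal sketch of a dataset that produces such batches is shown here; the target names ("color", "shape", "size"), the class name, and the random data are all hypothetical and not taken from the article.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class MultiTargetDataset(Dataset):
    """Hypothetical dataset returning a dict with an image and several targets."""

    def __init__(self, images, targets):
        self.images = images
        self.targets = targets  # dict: target name -> tensor of labels

    def __len__(self):
        return len(self.images)

    def __getitem__(self, idx):
        return {
            "image": self.images[idx],
            "labels": {name: values[idx] for name, values in self.targets.items()},
        }


images = torch.rand(8, 3, 8, 8)
targets = {
    "color": torch.randint(0, 5, (8,)),  # hypothetical target names
    "shape": torch.randint(0, 3, (8,)),
    "size": torch.randint(0, 2, (8,)),
}

loader = DataLoader(MultiTargetDataset(images, targets), batch_size=4, shuffle=False)
batch = next(iter(loader))
```

The default collate function batches each dictionary entry separately, including nested dictionaries, so no custom collate_fn is needed here.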

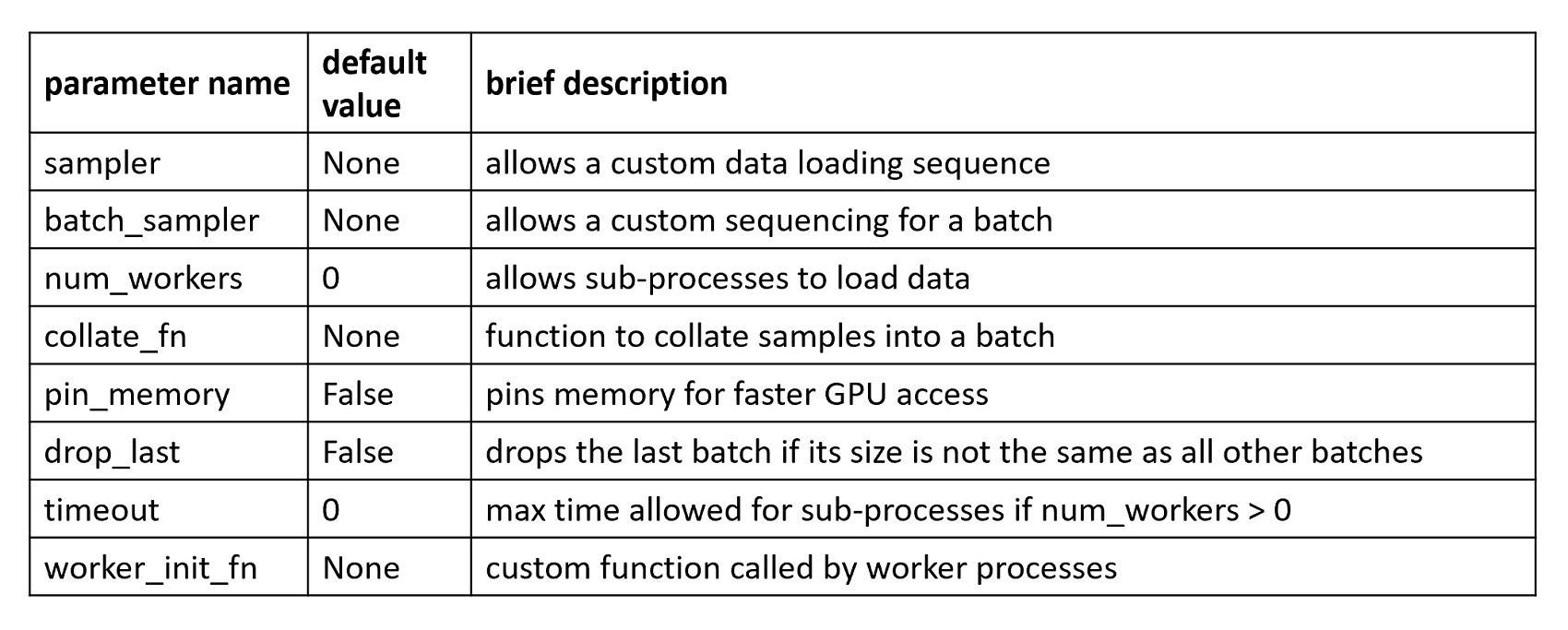

A detailed example of data loaders with PyTorch: PyTorch script. Now, we have to modify our PyTorch script accordingly so that it accepts the generator that we just created. In order to do so, we use PyTorch's DataLoader class, which in addition to our Dataset class, also takes in the following important arguments: batch_size, which denotes the number of samples contained in each generated batch.

Training with PyTorch — PyTorch Tutorials 1.12.1+cu102 documentation It enumerates data from the DataLoader, and on each pass of the loop does the following: Gets a batch of training data from the DataLoader. Zeros the optimizer's gradients. Performs an inference - that is, gets predictions from the model for an input batch. Calculates the loss for that set of predictions vs. the labels on the dataset
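
The loop steps listed in the excerpt above can be sketched as follows. The excerpt stops at the loss calculation; the backward pass and optimizer step shown here are the usual continuation of such a loop. The synthetic data, the linear model, and the hyperparameters are assumptions chosen only to keep the example small.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Hypothetical, linearly separable toy data.
inputs = torch.randn(64, 4)
labels = (inputs.sum(dim=1) > 0).long()
loader = DataLoader(TensorDataset(inputs, labels), batch_size=16, shuffle=True)

model = nn.Linear(4, 2)
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)


def train_one_epoch():
    running_loss = 0.0
    for i, (batch_inputs, batch_labels) in enumerate(loader):
        optimizer.zero_grad()                  # zero the optimizer's gradients
        outputs = model(batch_inputs)          # predictions for this batch
        loss = loss_fn(outputs, batch_labels)  # loss vs. the labels
        loss.backward()                        # compute gradients
        optimizer.step()                       # update the weights
        running_loss += loss.item()
    return running_loss / len(loader)


first_epoch_loss = train_one_epoch()
for _ in range(19):
    last_epoch_loss = train_one_epoch()
```

For data without labels, the same loop structure applies, but the loss has to be computed from the inputs alone (for example, a reconstruction loss).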

How to use Datasets and DataLoader in PyTorch for custom text ... TD = CustomTextDataset(text_labels_df['Text'], text_labels_df['Labels']): This initialises the class we made earlier with the 'Text' and 'Labels' data being passed in. This data will become 'self.text' and 'self.labels' within the class. The Dataset is saved under the variable named TD. The Dataset is now initialised and ready to be used!
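
The excerpt above refers to a CustomTextDataset class "made earlier" that is not shown. The sketch below is one plausible shape for it, not the article's code: the stored attributes self.text and self.labels follow the excerpt, while the returned dictionary keys and the example DataFrame are assumptions.

```python
import pandas as pd
from torch.utils.data import DataLoader, Dataset


class CustomTextDataset(Dataset):
    """Sketch of a text dataset built from two pandas Series."""

    def __init__(self, text, labels):
        self.text = text
        self.labels = labels

    def __len__(self):
        return len(self.labels)

    def __getitem__(self, idx):
        # Dictionary keys are an assumption made for this sketch.
        return {"Text": self.text.iloc[idx], "Class": self.labels.iloc[idx]}


# Hypothetical example data.
text_labels_df = pd.DataFrame(
    {
        "Text": ["Happy", "Amazing", "Sad", "Unhappy"],
        "Labels": ["Positive", "Positive", "Negative", "Negative"],
    }
)

TD = CustomTextDataset(text_labels_df["Text"], text_labels_df["Labels"])
batch = next(iter(DataLoader(TD, batch_size=2, shuffle=False)))
```

Strings are not converted to tensors by the default collate function; each dictionary entry in the batch stays a list of Python strings, so tokenization still has to happen in the dataset or in a custom collate_fn.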

Using ImageFolder without subfolders/labels - PyTorch Forums: ywang530 (Ywang530), January 23, 2020, 6:15am #1 (vision): I am working on an image classification project right now. I have a train dataset consisting of 102165 png files of different instruments. I have only a train folder, which contains all the image files, as the above screenshot shows.
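
For a single folder of images with no class subfolders, as in the question above, one common approach is a small custom Dataset that lists the files itself. The sketch below is an assumption-laden illustration: FlatImageFolder is a hypothetical class, and to keep the example runnable without image files or an imaging library, the demo passes a stand-in loader that returns a zero tensor. In real use the loader would open each file (for example with PIL) and convert it to a tensor.

```python
import os
import tempfile

import torch
from torch.utils.data import DataLoader, Dataset


class FlatImageFolder(Dataset):
    """Hypothetical dataset: every image file directly under root, no labels."""

    def __init__(self, root, loader, extensions=(".png", ".jpg", ".jpeg")):
        self.paths = sorted(
            os.path.join(root, name)
            for name in os.listdir(root)
            if name.lower().endswith(extensions)
        )
        self.loader = loader  # callable: path -> tensor

    def __len__(self):
        return len(self.paths)

    def __getitem__(self, idx):
        return self.loader(self.paths[idx])


def fake_loader(path):
    # Stand-in for real image decoding; returns a blank 3x8x8 "image".
    return torch.zeros(3, 8, 8)


with tempfile.TemporaryDirectory() as root:
    # Create empty placeholder files so the directory listing has content.
    for name in ["a.png", "b.png", "c.png", "d.png", "e.png", "notes.txt"]:
        open(os.path.join(root, name), "w").close()

    dataset = FlatImageFolder(root, loader=fake_loader)
    num_images = len(dataset)
    batch = next(iter(DataLoader(dataset, batch_size=2)))
```

Files with other extensions, such as notes.txt here, are skipped by the extension filter.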

anndata DataLoader for pyTorch without DistributedSampler #757 - GitHub I have been trying to implement an MLP to predict cell type labels using pyTorch Lightning and the AnnLoader function. For the implementation, I followed the AnnLoader tutorial to interface with pyTorch models and the PyTorch Lightning tutorial. I aim to implement the training, test and prediction methods, and run it on a GPU.

Creating a custom Dataset and Dataloader in Pytorch A dataloader in simple terms is a function that iterates through all our available data and returns it in the form of batches. For example if we have a dataset of 100 images, and we decide to batch...

![\[PDF\] PyTorch Metric Learning | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/1e0b3982e7f9ceeeb7fe676d8791e240700ca3da/1-Figure1-1.png)
